TL;DR

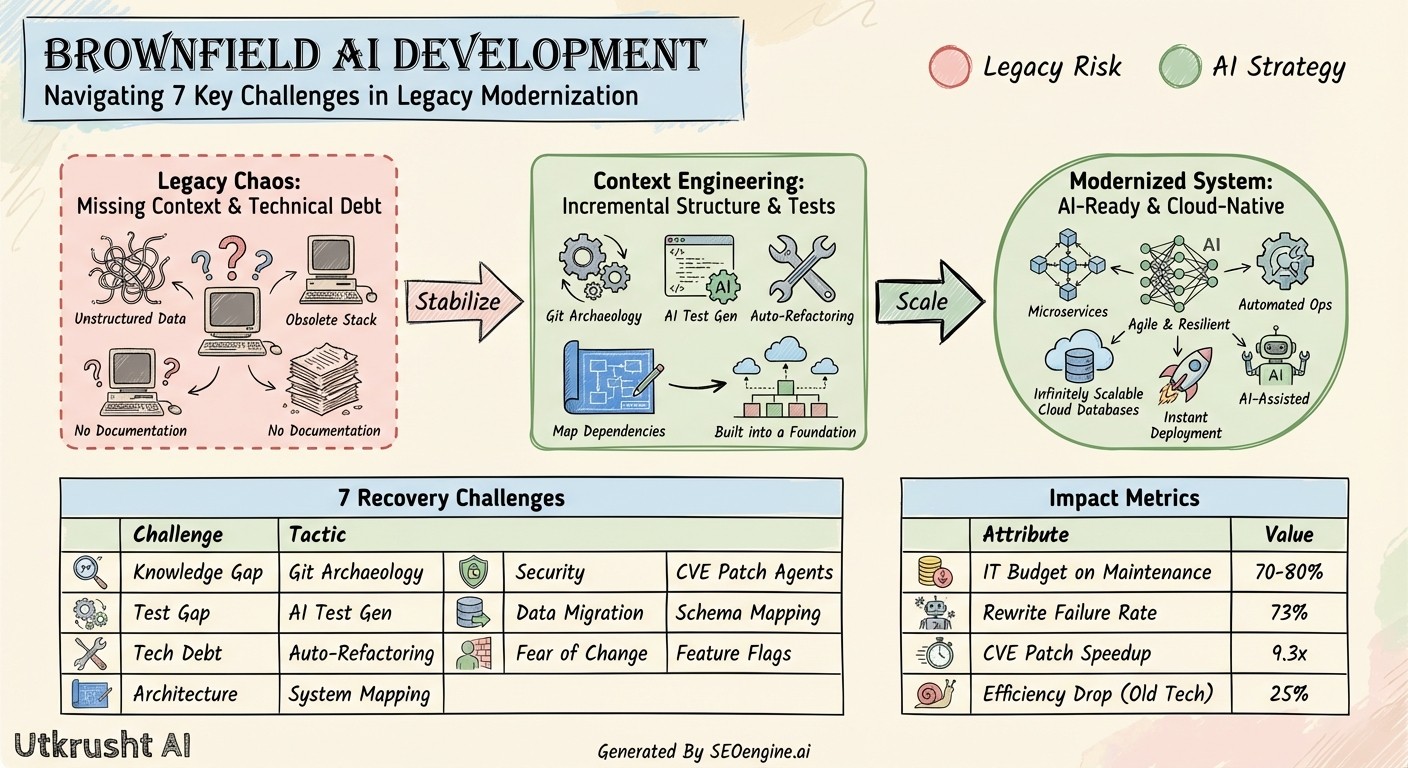

Brownfield codebases present unique challenges that can slow development and frustrate engineering teams. Research shows teams spend up to 75% of their time maintaining existing code rather than building new features.

This guide explores seven critical challenges, from technical debt accumulation to knowledge gaps, and provides actionable strategies to tackle each one, helping your team transform legacy systems into competitive advantages.

Key Takeaways:

- Address technical debt incrementally rather than through massive rewrites, allocating 15-25% of sprint capacity to quality improvements
- Create comprehensive documentation as code alongside the system itself, capturing architectural decisions and business logic explicitly
- Update dependencies regularly through phased approaches that minimize risk while maintaining security
- Build testing infrastructure gradually, starting with critical user journeys and growing coverage organically
- Assess and hire developers specifically for brownfield capabilities using realistic code scenarios rather than algorithm tests
- Establish clear ownership, visibility, and accountability for system health to prevent quality erosion
- Balance stakeholder expectations by translating technical challenges into business impact and demonstrating continuous improvement

Understanding Brownfield Codebases and Their Impact

A brownfield codebase refers to existing software systems that have accumulated code, dependencies, and technical decisions over years of development. Unlike greenfield projects that start fresh, brownfield environments carry the weight of past choices, outdated technologies, and undocumented assumptions.

For CEOs of custom software development companies, brownfield projects represent both opportunity and risk. Studies indicate that 80% of software maintenance costs stem from legacy code challenges.

When your team inherits or maintains brownfield systems, the ability to identify and overcome specific obstacles directly impacts project profitability and client satisfaction.

The distinction matters because brownfield work requires a different skill set than greenfield development. Developers must read and understand existing code, make changes without breaking functionality, and balance new feature development against maintenance needs.

This reality is why platforms like Utkrusht AI build their assessment methodology around real-job simulations that include debugging production code, refactoring legacy systems, and optimizing existing databases. Brownfield success demands fundamentally different capabilities than algorithm-solving or theoretical knowledge.

Challenge 1: Navigating Accumulated Technical Debt

Technical debt accumulates when teams take shortcuts or make expedient decisions that sacrifice long-term code quality for short-term delivery speed. In brownfield codebases, this debt compounds over months and years.

What makes technical debt particularly challenging?

The impact extends beyond code quality. Technical debt slows feature development by 23-40% according to industry benchmarks. Each new change requires working around previous shortcuts, creating a cascading effect that increases project timelines and costs.

Common manifestations include:

- Hardcoded values scattered throughout the application
- Duplicated business logic across multiple modules
- Inconsistent naming conventions and code styles
- Missing or outdated unit tests
- Workarounds for underlying architectural problems

Tackling the challenge:

Start by quantifying the debt through code quality metrics. Tools that measure cyclomatic complexity, code coverage, and duplication provide objective baselines. Prioritize debt reduction based on business impact, focusing on areas with the highest change frequency or customer-facing functionality.
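One way to turn these baselines into a ranked list is a "hotspot" score that multiplies complexity by change frequency. The sketch below is illustrative only: `ModuleStats`, its fields, the 1.5 weighting for customer-facing code, and the sample numbers are all assumptions, and in practice the inputs would come from your own static analysis tool and version-control history.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModuleStats:
    """Hypothetical per-module metrics gathered from your own tooling."""
    name: str
    cyclomatic_complexity: int  # from a static analysis tool
    commits_last_90_days: int   # change frequency from version control
    customer_facing: bool


def hotspot_score(m: ModuleStats, customer_weight: float = 1.5) -> float:
    """Complexity times change frequency, boosted for customer-facing code."""
    score = float(m.cyclomatic_complexity * m.commits_last_90_days)
    return score * customer_weight if m.customer_facing else score


def rank_hotspots(modules: list[ModuleStats]) -> list[ModuleStats]:
    """Return modules ordered from highest to lowest refactoring priority."""
    return sorted(modules, key=hotspot_score, reverse=True)


modules = [
    ModuleStats("billing", 48, 30, True),
    ModuleStats("reporting", 60, 4, False),
    ModuleStats("auth", 15, 12, True),
]
ranked = rank_hotspots(modules)
print([m.name for m in ranked])  # billing first: complex and changed often
```

Note that `reporting` has the highest raw complexity but ranks last because it rarely changes, which is the point of weighting by change frequency.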

Implement a "boy scout rule" where developers leave code slightly better than they found it. Rather than massive refactoring projects that stall feature development, integrate incremental improvements into regular sprint work. Allocate 15-25% of sprint capacity specifically for technical debt reduction.

Create visibility by adding technical debt items to your backlog with estimated costs. When stakeholders see the cumulative impact on development velocity, they understand why addressing debt delivers business value.

How do you prevent new debt accumulation?

Establish clear coding standards and enforce them through automated linting and code review processes. Define "done" to include documentation, tests, and adherence to architectural patterns. Make quality gates non-negotiable before code reaches production.

Challenge 2: Dealing with Documentation Gaps and Knowledge Silos

Brownfield codebases frequently suffer from incomplete or outdated documentation. Original developers move on, taking institutional knowledge with them. The code becomes the only source of truth, but deciphering it requires significant time investment.

Research shows that developers spend 35-50% of their time trying to understand code before they can modify it. Knowledge silos emerge when specific individuals hold critical understanding about system components, creating bottlenecks and single points of failure.

What happens when documentation is missing?

Teams make incorrect assumptions about how systems work. They implement workarounds instead of proper solutions. Development time increases as engineers reverse-engineer functionality from code. Bugs emerge because developers misunderstand dependencies and side effects.

Adopt "documentation as code" practices where documentation lives alongside the codebase in version control. Write README files for each module explaining purpose, dependencies, and key decisions. Document the "why" behind architectural choices, not just the "what" of implementation.

Implement lightweight Architecture Decision Records (ADRs) that capture important technical decisions in short, structured documents. Each ADR explains the context, decision made, and consequences, creating a historical record of system evolution.

Schedule regular knowledge-sharing sessions where team members present specific system components. Record these sessions for future reference. Rotate developers across different modules to spread knowledge and prevent silos.

Use tools that generate documentation from code comments and structure. Keep this documentation updated through continuous integration checks that fail when code changes lack corresponding documentation updates.
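A continuous integration check of this kind can be quite small. The following sketch assumes a repository layout where each top-level directory is a module, code files end in `.py`, and documentation files end in `.md`; the function name and that layout are hypothetical, and the list of changed files would come from your CI system or version control.

```python
from pathlib import PurePosixPath


def modules_missing_doc_updates(changed_files: list[str]) -> set[str]:
    """Return top-level modules with code changes but no documentation change."""
    code_modules: set[str] = set()
    documented_modules: set[str] = set()
    for path in changed_files:
        parts = PurePosixPath(path).parts
        if len(parts) < 2:
            continue  # ignore files at the repository root
        module = parts[0]
        if path.endswith(".md"):
            documented_modules.add(module)
        elif path.endswith(".py"):
            code_modules.add(module)
    return code_modules - documented_modules


changed = ["billing/invoice.py", "billing/README.md", "orders/cart.py"]
missing = modules_missing_doc_updates(changed)
print(sorted(missing))  # modules a CI job could flag
```

A CI job could exit with a non-zero status whenever the returned set is non-empty. A strict rule like this can be noisy, so teams may prefer to start with a warning rather than a hard failure.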

Specific actions to take:

- Create a living system diagram showing component relationships
- Document deployment procedures with step-by-step runbooks
- Maintain an FAQ document answering common questions new developers ask
- Record video walkthroughs of critical system flows
- Establish a mentorship program pairing experienced and new team members

Challenge 3: Managing Outdated Dependencies and Technology Stack

Technology evolves rapidly. Brownfield codebases often rely on outdated frameworks, libraries, and languages that no longer receive security updates or community support. This creates security vulnerabilities and makes it harder to attract developers familiar with modern tools.

Statistics show that 85% of commercial applications contain open-source components, and 84% of codebases have at least one known vulnerability. Outdated dependencies multiply these risks.

Why is updating dependencies so risky?

Breaking changes in newer versions can cascade through the system. Tests may not provide adequate coverage to catch regressions. The effort required for updates competes with feature development priorities. Companies delay updates until forced by security incidents or compatibility issues.

Create a complete inventory of all dependencies, their current versions, and latest available versions. Identify which dependencies have security vulnerabilities or lack active maintenance. Prioritize updates based on security risk, business criticality, and update complexity.
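The inventory and prioritization step can be sketched as follows. This is a minimal illustration that assumes plain `MAJOR.MINOR.PATCH` version strings and uses made-up package names; the `Dependency` type and the ordering rule (vulnerable first, then least effort) are assumptions, not a standard.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Dependency:
    name: str                      # hypothetical package name
    current: str                   # e.g. "2.3.1"
    latest: str
    has_known_vulnerability: bool


def parse_version(version: str) -> tuple[int, int, int]:
    """Parse a plain MAJOR.MINOR.PATCH string (no pre-release tags)."""
    major, minor, patch = (int(part) for part in version.split("."))
    return major, minor, patch


def update_kind(dep: Dependency) -> str:
    """Classify the gap between the current and latest version."""
    cur, new = parse_version(dep.current), parse_version(dep.latest)
    if new <= cur:
        return "up-to-date"
    if new[0] != cur[0]:
        return "major"
    if new[1] != cur[1]:
        return "minor"
    return "patch"


def prioritize(deps: list[Dependency]) -> list[Dependency]:
    """Vulnerable dependencies first, then the least risky updates first."""
    effort = {"patch": 0, "minor": 1, "major": 2}
    pending = [d for d in deps if update_kind(d) != "up-to-date"]
    return sorted(
        pending,
        key=lambda d: (not d.has_known_vulnerability, effort[update_kind(d)]),
    )


inventory = [
    Dependency("orm", "4.2.0", "4.6.1", False),
    Dependency("webframework", "1.4.0", "3.0.0", True),
    Dependency("logger", "1.0.0", "1.0.0", False),
    Dependency("jsonlib", "2.1.0", "2.1.5", False),
]
plan = [d.name for d in prioritize(inventory)]
print(plan)
```

Business criticality is left out here for brevity; it could be added as another element of the sort key.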

Adopt a phased update strategy. Update dependencies incrementally rather than attempting wholesale technology stack replacements. Start with minor version updates that minimize breaking changes. Expand test coverage before major updates to catch regressions quickly.

Establish a regular update cadence: quarterly reviews of dependency status, with security patches applied monthly. Automate dependency update notifications and security scanning through continuous integration pipelines.

When frameworks reach end-of-life, plan migration paths 12-18 months in advance. Break large migrations into discrete phases that deliver incremental value. This prevents "big bang" rewrites that often fail or drag on for years.

| Risk Factor | Outdated Stack | Updated Stack | Migration In Progress |
|---|---|---|---|
| Security Vulnerabilities | High (known exploits) | Low (patched) | Medium (mixed versions) |
| Developer Availability | Limited (niche skills) | Abundant (current tech) | Variable (needs both) |
| Performance Issues | Common (unoptimized) | Rare (modern optimizations) | May increase initially |
| Integration Challenges | High (compatibility) | Low (standard APIs) | High (dual systems) |
| Maintenance Cost | High (specialized) | Low (community support) | Highest (double effort) |

Challenge 4: Understanding Complex and Undocumented Business Logic

Business rules embedded in brownfield code often reflect years of accumulated requirements, special cases, and organizational knowledge. Without clear documentation, this logic becomes opaque and difficult to modify safely.

What makes business logic particularly challenging in legacy systems?

The logic is scattered across multiple layers: database stored procedures, application code, and even front-end validation. Conditional statements nest deeply, handling edge cases that may no longer apply. Comments are sparse or misleading, written years ago by developers no longer with the company.

On custom software development teams, understanding complex business logic is often the steepest part of the learning curve for new developers. They can learn syntax and frameworks quickly, but domain knowledge requires months of immersion.

Extract business rules into dedicated service layers or domain models using Domain-Driven Design principles. This consolidates logic into discoverable locations and reduces duplication. Create unit tests that document expected behavior through concrete examples.

Interview subject matter experts to document business rules explicitly. Convert their explanations into decision tables or flowcharts that developers can reference. These visual representations clarify complex conditional logic better than code comments.

Implement feature flags for changes to critical business logic. This allows rolling back problematic changes quickly without full deployments. Feature flags also enable A/B testing of business rule modifications, validating changes with real user data before full rollout.
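As a minimal sketch of the idea, the example below guards a changed business rule behind a flag. The in-memory `FeatureFlags` class, the flag name `new_shipping_rules`, and both fee rules are hypothetical; a real system would typically load flags from configuration or a flag service.

```python
class FeatureFlags:
    """In-memory flag store, standing in for a config file or flag service."""

    def __init__(self, flags=None):
        self._flags = dict(flags or {})

    def is_enabled(self, name: str) -> bool:
        return self._flags.get(name, False)  # unknown flags default to off

    def set(self, name: str, enabled: bool) -> None:
        self._flags[name] = enabled


def legacy_shipping_fee(order_total: float) -> float:
    """Existing rule: free shipping from 50 upward."""
    return 5.0 if order_total < 50 else 0.0


def new_shipping_fee(order_total: float) -> float:
    """Proposed rule: lower fee and a lower free-shipping threshold."""
    return 4.0 if order_total < 40 else 0.0


def shipping_fee(order_total: float, flags: FeatureFlags) -> float:
    """Route to the new rule only while the flag is on."""
    if flags.is_enabled("new_shipping_rules"):
        return new_shipping_fee(order_total)
    return legacy_shipping_fee(order_total)


flags = FeatureFlags()
before = shipping_fee(45.0, flags)       # legacy rule applies
flags.set("new_shipping_rules", True)
after = shipping_fee(45.0, flags)        # new rule applies
flags.set("new_shipping_rules", False)   # rollback without a deployment
rolled_back = shipping_fee(45.0, flags)
```

Because the legacy path stays in place, turning the flag off restores the old behavior immediately. Flags should be removed once a change is fully rolled out so they do not become a new source of debt.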

Refactor complex conditional logic into strategy patterns or rules engines. This makes each rule explicit and testable in isolation. Modern rules engines even allow business analysts to modify logic without developer involvement.
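To illustrate, nested discount conditionals can be replaced with a list of explicit rules, each testable in isolation. The `Customer` fields, rule names, and rates below are invented for the example, and "highest rate wins" is just one possible conflict policy.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Customer:
    years_active: int
    is_employee: bool
    order_total: float


@dataclass(frozen=True)
class DiscountRule:
    """One explicit business rule: a name, a condition, and an outcome."""
    name: str
    applies: Callable[[Customer], bool]
    rate: float


# Each rule is a separate, named entry instead of a branch in a nested if/else.
RULES = [
    DiscountRule("employee", lambda c: c.is_employee, 0.30),
    DiscountRule("loyal_customer", lambda c: c.years_active >= 5, 0.10),
    DiscountRule("large_order", lambda c: c.order_total >= 1000, 0.05),
]


def best_discount(customer: Customer, rules=RULES) -> tuple[str, float]:
    """Return the matching rule with the highest rate, or ("none", 0.0)."""
    matching = [rule for rule in rules if rule.applies(customer)]
    if not matching:
        return ("none", 0.0)
    best = max(matching, key=lambda rule: rule.rate)
    return (best.name, best.rate)


print(best_discount(Customer(years_active=6, is_employee=False, order_total=1200.0)))
```

Adding or retiring a rule becomes a one-line change to `RULES`, and the rule list itself doubles as documentation of the business policy.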

Practical steps include:

- Map existing business flows from end to end
- Identify areas where business logic duplicates across the codebase
- Create a glossary defining domain terminology consistently
- Write characterization tests that document current behavior before changes
- Establish regular touchpoints with business stakeholders
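The characterization tests mentioned in the steps above can be sketched like this. `legacy_price` is a made-up stand-in for an undocumented function; the technique is to record what the code does today and assert that refactored versions still match, without first deciding whether that behavior is "correct".

```python
def legacy_price(quantity: int, unit_price: float) -> float:
    """Stand-in for an undocumented legacy function whose rules nobody remembers."""
    total = quantity * unit_price
    if quantity > 10:
        total *= 0.9
    if total > 500:
        total -= 25
    return round(total, 2)


def record_behavior(func, cases):
    """Run the code as-is and capture what it currently returns for each input."""
    return {case: func(*case) for case in cases}


# Inputs chosen to exercise each branch; in practice the recorded results
# would be saved to a file and committed alongside the test.
CASES = [(1, 10.0), (11, 10.0), (10, 60.0), (20, 40.0)]
GOLDEN = record_behavior(legacy_price, CASES)


def assert_behavior_unchanged(func=legacy_price):
    """Fails if a refactored version diverges from the recorded behavior."""
    assert record_behavior(func, CASES) == GOLDEN


assert_behavior_unchanged()  # passes against the untouched legacy code
```

During refactoring, the new implementation is passed to `assert_behavior_unchanged`; any difference is then a deliberate decision to discuss with stakeholders rather than an accidental regression.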

Challenge 5: Navigating Poor Code Structure and Architecture

Architectural problems in brownfield codebases manifest as tight coupling, unclear separation of concerns, and violation of design principles. The system evolves organically without coherent architectural vision, creating a "big ball of mud" that resists change.

Code structure issues include circular dependencies, God objects with thousands of lines, and inconsistent layering where data access mixes with business logic and presentation code. These problems make testing difficult and changes risky.

How does poor architecture slow development?

Changes in one area unexpectedly break functionality elsewhere. Adding features requires modifying numerous files across the system. Unit testing becomes nearly impossible due to tight coupling. Development estimates increase as engineers account for unintended consequences.

Apply the Strangler Fig pattern to gradually replace poor architecture. Build new functionality with proper structure while maintaining the old system. Over time, new code strangles the old, eventually allowing its removal. This approach delivers incremental value while improving architecture.

Identify architectural boundaries that should exist and introduce them gradually. Create interfaces between layers even if initial implementations simply delegate to existing code. These interfaces establish contracts that enable future refactoring behind stable APIs.
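A minimal sketch of this approach combines an interface, an adapter that delegates to existing code, and a router that moves traffic to the new implementation gradually. All names here (`InvoiceService`, `legacy_calc_invoice`, the customer IDs) are hypothetical, and routing by customer is only one of several possible migration strategies.

```python
from typing import Protocol


def legacy_calc_invoice(amounts: list[float], mode: str) -> float:
    """Stand-in for an existing legacy routine with an awkward signature."""
    total = 0.0
    for amount in amounts:
        total += amount
    return total


class InvoiceService(Protocol):
    """The boundary that callers depend on from now on."""

    def total(self, amounts: list[float]) -> float: ...


class LegacyInvoiceAdapter:
    """Initial implementation: simply delegates to the existing code."""

    def total(self, amounts: list[float]) -> float:
        return legacy_calc_invoice(amounts, "STD")


class NewInvoiceService:
    """Replacement built behind the same interface."""

    def total(self, amounts: list[float]) -> float:
        return round(sum(amounts), 2)


class InvoiceRouter:
    """Sends migrated customers to the new code and everyone else to legacy."""

    def __init__(self, legacy: InvoiceService, new: InvoiceService,
                 migrated_customers: set[str]):
        self._legacy = legacy
        self._new = new
        self._migrated = migrated_customers

    def service_for(self, customer_id: str) -> InvoiceService:
        return self._new if customer_id in self._migrated else self._legacy

    def total(self, customer_id: str, amounts: list[float]) -> float:
        return self.service_for(customer_id).total(amounts)


router = InvoiceRouter(LegacyInvoiceAdapter(), NewInvoiceService(), {"acme"})
print(router.total("acme", [10.0, 20.5]), router.total("other", [10.0, 20.5]))
```

Once every customer is in the migrated set, the adapter and the legacy routine can be deleted without touching callers.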

Measure architectural quality through metrics like afferent and efferent coupling, instability, and abstractness. Track these metrics over time to verify that architectural improvements have lasting impact. Set quality gates that prevent new code from violating architectural standards.
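For reference, instability is conventionally defined as I = Ce / (Ca + Ce), where Ce (efferent coupling) counts outgoing dependencies and Ca (afferent coupling) counts incoming ones. The sketch below computes these from a hand-written dependency map; the module names are invented, and a real map would be extracted from imports or build files.

```python
def coupling_metrics(dependencies: dict[str, set[str]]) -> dict[str, dict[str, float]]:
    """Compute afferent coupling, efferent coupling, and instability per module.

    `dependencies` maps each module to the set of modules it depends on.
    """
    modules = set(dependencies) | {d for deps in dependencies.values() for d in deps}
    metrics: dict[str, dict[str, float]] = {}
    for module in modules:
        # Efferent: modules this one depends on (self-references ignored).
        efferent = len(dependencies.get(module, set()) - {module})
        # Afferent: other modules that depend on this one.
        afferent = sum(
            1 for other, deps in dependencies.items()
            if other != module and module in deps
        )
        total = afferent + efferent
        metrics[module] = {
            "ca": afferent,
            "ce": efferent,
            "instability": efferent / total if total else 0.0,
        }
    return metrics


deps = {
    "ui": {"orders", "billing"},
    "orders": {"billing", "db"},
    "billing": {"db"},
}
metrics = coupling_metrics(deps)
for name in sorted(metrics):
    print(name, metrics[name])
```

An instability near 0 (like `db` here) marks a module many others rely on, so changes to it are risky; a value near 1 (like `ui`) marks a module that is easy to change but depends heavily on others.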

Just as Utkrusht AI emphasizes evaluating developers through real-world refactoring scenarios rather than theoretical exercises, your hiring process should prioritize candidates who demonstrate pragmatic architectural improvement skills. Engineers who excel at working within constraints and improving systems incrementally deliver far more value in brownfield environments than those who demand greenfield perfection.

| Architectural Issue | Impact on Development | Refactoring Strategy | Expected Timeline |
|---|---|---|---|
| Tight Coupling | Changes cascade unpredictably | Introduce interfaces and abstractions | 3-6 months per module |
| God Objects | Single points of failure | Extract responsibilities into focused classes | 2-4 weeks per object |
| Circular Dependencies | Build order problems | Introduce dependency inversion | 1-2 months per cycle |
| Mixed Concerns | Difficult testing | Separate layers with clear boundaries | 4-8 months for full system |
| Inconsistent Patterns | Cognitive overhead | Standardize on common patterns | Ongoing, 6-12 months |

Challenge 6: Overcoming Testing Gaps and Quality Assurance Issues

Brownfield codebases typically lack comprehensive automated testing. Manual testing predominates, creating bottlenecks and allowing regressions to reach production. Code coverage often hovers below 20%, leaving most functionality untested.

Studies indicate that teams with less than 40% code coverage spend 50% more time fixing production bugs. Without tests, developers fear making changes because they cannot verify that existing functionality remains intact.

Why is adding tests to existing code so difficult?

The code was not written with testability in mind. Dependencies couple tightly, making isolation impossible. Tests require extensive setup of database state, external services, and complex object graphs. Engineers face pressure to deliver features rather than spend time on testing infrastructure.

Start with end-to-end integration tests that verify critical user journeys. These provide immediate value by catching major regressions, even if they run slowly. Focus on high-value workflows that users depend on most.

Add tests before fixing bugs using Test-Driven Development principles. Write a failing test that reproduces the bug, fix the code to make the test pass, then refactor. This grows test coverage organically while preventing regression of known issues.
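A small, hypothetical example of that loop: suppose a bug report says name parsing crashes for customers with a single-word name. The test below would be written first and fail against the original code; the function shown is the fixed version that makes it pass.

```python
def split_full_name(full_name: str) -> tuple[str, str]:
    """Split a name into (first, rest).

    The hypothetical original did `first, last = full_name.split(" ")`,
    which raised ValueError for single-word names.
    """
    first, _, rest = full_name.strip().partition(" ")
    return first, rest.strip()


def test_single_word_name_does_not_crash():
    # Step 1: written before the fix, reproducing the reported bug.
    assert split_full_name("Madonna") == ("Madonna", "")


def test_existing_behavior_is_preserved():
    # Step 2: guards the cases that already worked.
    assert split_full_name("Ada Lovelace") == ("Ada", "Lovelace")


test_single_word_name_does_not_crash()
test_existing_behavior_is_preserved()
```

The reproducing test stays in the suite permanently, so the same bug cannot quietly return.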

Introduce seams into tightly coupled code to enable unit testing. Dependency injection containers allow swapping real implementations with test doubles. Extract interfaces for external dependencies like databases and APIs.
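The sketch below shows a seam introduced through constructor injection, without any container. `ExchangeRateSource`, `PriceConverter`, and the rates are illustrative names and values; the point is that the class no longer creates its external dependency itself, so a test double can be passed in.

```python
from typing import Protocol


class ExchangeRateSource(Protocol):
    """Extracted interface for an external dependency (e.g. a rates API)."""

    def rate(self, currency: str) -> float: ...


class PriceConverter:
    """Business logic that receives its dependency instead of constructing it."""

    def __init__(self, rates: ExchangeRateSource):
        self._rates = rates

    def to_usd(self, amount: float, currency: str) -> float:
        return round(amount * self._rates.rate(currency), 2)


class FixedRates:
    """Test double: returns canned rates, no network access needed."""

    def __init__(self, rates: dict[str, float]):
        self._rates = rates

    def rate(self, currency: str) -> float:
        return self._rates[currency]


converter = PriceConverter(FixedRates({"EUR": 1.5}))
print(converter.to_usd(10.0, "EUR"))
```

In production, the same `PriceConverter` would receive an implementation that calls the real service, so the logic under test is identical in both environments.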

Set a coverage ratchet that prevents coverage from decreasing. New code must include tests, and coverage must increase over time. Make test writing part of "done" criteria for all stories.
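A coverage ratchet reduces to one comparison plus a stored baseline. In this sketch the function name, the 0.1-point tolerance, and the sample percentages are assumptions; the current figure would come from your coverage tool and the baseline from a file kept in the repository.

```python
def check_coverage_ratchet(current: float, baseline: float,
                           tolerance: float = 0.1) -> tuple[bool, float]:
    """Return (passed, new_baseline) for a coverage percentage.

    The build fails if coverage drops more than `tolerance` points below the
    baseline; on success the baseline only ever moves upward.
    """
    if current + tolerance < baseline:
        return False, baseline
    return True, max(current, baseline)


print(check_coverage_ratchet(18.4, 18.0))   # coverage improved, baseline rises
print(check_coverage_ratchet(17.0, 18.0))   # coverage dropped, build should fail
```

The small tolerance avoids failing builds over rounding noise while still preventing a gradual slide.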

Invest in test infrastructure and tooling. Fast test execution encourages frequent test runs. Clear test failure messages help developers quickly identify problems. Shared test fixtures reduce setup duplication.

Testing priorities for brownfield systems:

- Critical business transactions (payment processing, order fulfillment)
- Complex business logic with many conditionals
- Areas with frequent bug reports
- Recently modified code (regression prevention)
- Integration points with external systems

Challenge 7: Addressing Team Knowledge Gaps and Skill Mismatches

The final challenge stems from people rather than code. Teams working on brownfield codebases need developers comfortable with ambiguity, skilled at reading unfamiliar code, and experienced with gradual refactoring. These skills differ significantly from greenfield development.

Many developers prefer working on new projects with modern technologies. Brownfield work requires patience, detective skills, and pragmatism. Finding developers with this mindset, or developing it within existing teams, presents a significant challenge.

What skills separate effective brownfield developers from others?

Strong brownfield developers excel at reading and understanding existing code quickly. They make pragmatic tradeoffs between ideal solutions and practical improvements. They communicate effectively about technical constraints and refactoring costs. They balance new feature delivery with quality improvements.

Traditional hiring processes fail to identify these capabilities. Resumes and algorithm tests reveal little about a candidate's ability to work effectively in complex, messy codebases.

This challenge is why Utkrusht AI built its platform around 20-minute real-job simulations where candidates debug APIs, optimize slow database queries, and refactor production code. The simulations give hiring managers concrete evidence of how candidates think and work in authentic brownfield scenarios before interviews even begin.

Assess candidates specifically for brownfield capabilities. Present them with existing code containing bugs or performance issues and evaluate their approach to diagnosis and resolution. This reveals how they think through unfamiliar systems and make changes safely.

Provide comprehensive onboarding for new team members. Pair them with experienced developers for the first month. Create a structured learning path covering system architecture, deployment procedures, and common problem areas. Set clear expectations that becoming productive takes time.

Invest in training existing team members on refactoring techniques, design patterns, and working with legacy code. Books like "Working Effectively with Legacy Code" by Michael Feathers provide valuable frameworks. Hands-on workshops where teams refactor actual production code together build shared understanding.

Rotate developers across different modules to spread knowledge and prevent expertise silos. This also makes work more interesting for team members who might otherwise feel stuck maintaining the same code indefinitely.

Recognize and reward brownfield work appropriately. Developers who improve system stability, reduce technical debt, and enable faster feature delivery provide immense business value even when their work is less visible than new features.

| Skill Category | Greenfield Priority | Brownfield Priority | Development Strategy |
|---|---|---|---|
| Reading Unfamiliar Code | Low | Critical | Code reading exercises, pair programming |
| Refactoring Techniques | Medium | Critical | Structured training, mentored practice |
| Debugging Complex Systems | Medium | Critical | Real scenario practice, post-mortems |
| Making Pragmatic Tradeoffs | Low | High | Business context training, decision frameworks |
| Communication about Constraints | Medium | High | Stakeholder interaction practice |

Key Strategies for Brownfield Success

Successfully managing brownfield codebases requires combining technical approaches with organizational discipline. Here are essential strategies that work across all seven challenges:

Establish clear ownership and accountability. Assign specific individuals or teams as owners for each major system component. Owners maintain documentation, review changes, and guide architectural decisions. This prevents the "tragedy of the commons" where no one feels responsible for code quality.

Create visibility into system health. Implement monitoring, logging, and alerting that surfaces problems quickly. Track key metrics like deployment frequency, lead time for changes, mean time to recovery, and change failure rate. Dashboard visibility drives improvement by making problems impossible to ignore.
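The four metrics named above can be computed from a simple deployment log. This is a sketch under stated assumptions: the `Deployment` record and the sample history are invented, lead time is measured from commit to deployment, and recovery time from a failed deployment to restoration. Real data would come from your CI/CD and incident tooling.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass(frozen=True)
class Deployment:
    """Hypothetical record of one production deployment."""
    committed_at: datetime
    deployed_at: datetime
    failed: bool = False
    restored_at: Optional[datetime] = None  # set only for failed deployments


def delivery_metrics(deployments: list[Deployment], period_days: int) -> dict[str, float]:
    """Compute the four delivery metrics for one reporting period."""
    if not deployments:
        raise ValueError("need at least one deployment")
    lead_hours = [
        (d.deployed_at - d.committed_at) / timedelta(hours=1) for d in deployments
    ]
    failures = [d for d in deployments if d.failed]
    recovery_hours = [
        (d.restored_at - d.deployed_at) / timedelta(hours=1)
        for d in failures
        if d.restored_at is not None
    ]
    return {
        "deployments_per_week": len(deployments) * 7 / period_days,
        "mean_lead_time_hours": sum(lead_hours) / len(lead_hours),
        "change_failure_rate": len(failures) / len(deployments),
        "mean_time_to_recovery_hours": (
            sum(recovery_hours) / len(recovery_hours) if recovery_hours else 0.0
        ),
    }


start = datetime(2024, 1, 1, 9, 0)
history = [
    Deployment(start, start + timedelta(hours=24)),
    Deployment(start, start + timedelta(hours=48)),
    Deployment(start, start + timedelta(hours=12), failed=True,
               restored_at=start + timedelta(hours=14)),
    Deployment(start, start + timedelta(hours=12)),
]
metrics = delivery_metrics(history, period_days=14)
for name, value in metrics.items():
    print(f"{name}: {value}")
```

Tracking these values per period, rather than as one-off snapshots, is what shows whether improvement work is paying off.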

Build strong relationships with stakeholders. Help business partners understand the cost of technical debt and the value of refactoring investments. Translate technical challenges into business terms like reduced time-to-market, lower maintenance costs, and decreased risk of outages.

Balance maintenance and new development. Allocate sprint capacity explicitly between new features and system improvements. A common ratio is 70% new features, 20% technical debt reduction, and 10% learning and process improvement. Adjust based on current system health.

Celebrate improvement wins. When teams reduce deployment time, eliminate a class of bugs, or successfully migrate to updated frameworks, recognize these achievements. This reinforces that brownfield work delivers real value.

Frequently Asked Questions

How long does it take to improve a brownfield codebase?

Meaningful improvement typically takes 3-6 months of consistent effort for focused areas. Complete system transformation requires 12-24 months depending on codebase size and team capacity. The key is steady, incremental progress rather than attempting full rewrites that rarely succeed.

Should we rewrite the system or refactor incrementally?

Incremental refactoring succeeds far more often than complete rewrites. Research shows that 80% of major rewrite projects fail or significantly exceed time and budget estimates.

Refactor incrementally using patterns like Strangler Fig, delivering value continuously while improving code quality. Only consider rewrites when the technology stack is completely obsolete or business requirements have fundamentally changed.

How do we convince leadership to invest in technical debt?

Quantify technical debt impact in business terms. Calculate how much development velocity has slowed due to code quality issues. Estimate the cost of production incidents caused by fragile code.

Present these as risks that threaten business objectives. Propose specific improvements with projected return on investment, showing how reduced maintenance costs and faster feature delivery justify the investment.

What percentage of developer time should go toward brownfield improvements?

Industry benchmarks suggest allocating 15-25% of development capacity to technical debt reduction and system improvements. Teams with severe technical debt may need 30-40% initially.

Track velocity improvements to demonstrate that quality investments enable faster feature delivery over time, making the business case for continued investment.

How do we retain developers working on brownfield projects?

Provide variety by rotating assignments between maintenance work and new development. Invest in training and skill development. Create clear career paths that recognize brownfield expertise.

Celebrate successes in improving system quality and stability. Ensure competitive compensation that reflects the specialized skills required. Most importantly, empower developers to actually fix problems they identify rather than just working around them.

What tools help manage brownfield codebases effectively?

Essential tools include static code analysis platforms like SonarQube for tracking code quality, dependency scanners like Dependabot for security updates, and architecture visualization tools like Structure101.

Comprehensive logging and monitoring with tools like Datadog or New Relic make problems visible. Version control with Git enables safe experimentation through branching. Automated testing frameworks provide regression safety nets.

How do we identify developers who excel at brownfield work?

Assess candidates using realistic scenarios that mirror actual brownfield challenges. Have them debug production code, optimize slow queries, or refactor legacy implementations while explaining their thought process. Watch for pragmatic problem-solving, ability to understand unfamiliar code quickly, and comfort with ambiguity.

Traditional coding tests and algorithm questions poorly predict brownfield success because they omit the context and constraints that make legacy work challenging.

Conclusion

Brownfield codebases present significant challenges, but they also represent opportunities to deliver outsized business impact.

The seven challenges (technical debt accumulation, documentation gaps, outdated dependencies, complex business logic, poor architecture, testing gaps, and team skill mismatches) are surmountable with systematic approaches and organizational commitment.

Remember that teams can spend up to 75% of their time maintaining existing systems rather than building new ones. This reality makes brownfield expertise a competitive advantage for custom software development companies.

Teams that excel at understanding, improving, and extending legacy systems deliver more value to clients and complete projects more predictably than those lacking these capabilities.

The key lies in treating brownfield work as a distinct discipline requiring specific skills, tools, and processes. Rather than viewing legacy codebases as burdens, recognize them as assets containing years of business knowledge and proven functionality.

Your ability to unlock value from these systems while gradually improving their quality determines long-term project success. Platforms like Utkrusht AI reflect this through their emphasis on real-job simulations and practical skill validation: assessing and developing the capabilities that matter in authentic work environments, rather than theoretical perfection, drives actual results in brownfield contexts.

Start by assessing which of these seven challenges most impacts your current projects. Select one area for improvement, implement the strategies outlined, and measure results over the next quarter.

Small, consistent improvements compound into transformative change.

Founder, Utkrusht AI

Previously at Euler Motors, Oracle, and Microsoft. 12+ years as an engineering leader, with 500+ interviews conducted across the US, Europe, and India.