Tech Hiring in the Age of AI

We've been in tech long enough to watch hiring trends come and go like fashion seasons. First, everyone was obsessed with ATS filtering. Then came the rise of tests and multiple-choice quizzes. Then it was all about whiteboard coding.

After that came algorithm tests, and then take-home assignments. The methods keep changing, but the goal stays the same: find tech people who can actually ship quality code and build things that work.

Here's the thing—there's always been a gap between what we test for in interviews and what people actually do on the job.

Right now? That gap is bigger than ever. AI tools such as ChatGPT and Claude have completely changed how we work, but most companies are still interviewing like it's 2019. It's like preparing for a horse race when everyone else has moved on to cars.

And if you're running a small engineering team or a software development shop? This problem is 10x worse.

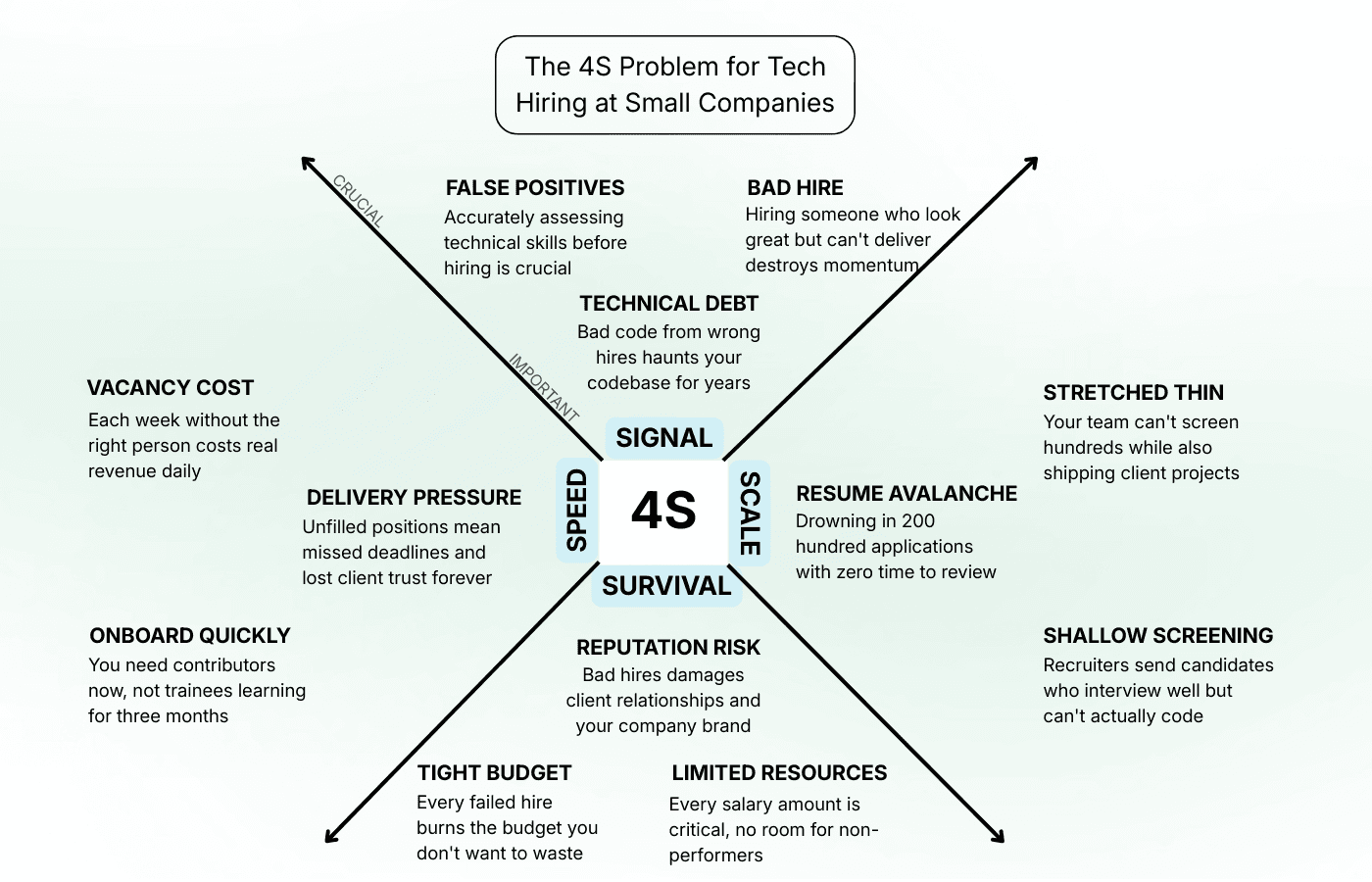

The 4S Problem for Small Engineering Teams and Custom Development Companies

Let’s paint a picture. You're running a 30-person dev shop or leading a small engineering team.

You post a job opening for a senior developer. Within 3 days, you have 200+ applications. You now have no idea how to tell the strong candidates from the weak ones in this pile.

This is one of the pain points we heard repeatedly from small engineering teams. The problem isn't sourcing candidates. It's assessing them accurately enough to find the best 5 in the pool.

Why Traditional Hiring Methods Are (Still) Failing

We're in the age of AI, yet tech hiring has the same set of problems. We have engineering backgrounds ourselves and have conducted 500+ tech interviews globally. The pattern is consistent across small engineering teams.

The main reason is that companies still test candidates on completely the wrong parameters. We surveyed 100+ engineering teams and found the same pattern everywhere: today's hiring methods and processes test everything about a candidate except the job itself.

Why Other Hiring Tools and Methods Get This Wrong

We looked at 50+ other modern AI tools in this space. Think HackerRank, LeetCode, etc.

Credit to them: they've done a reasonably good job of standardizing processes and putting structure in place, yet they still don't accurately evaluate candidates' technical skills.

Here are some specific reasons:

They test memorized theory knowledge. We simulate your job. Other platforms focus on data structures and whiteboard problems. We drop candidates into realistic scenarios: "This API is returning 500 errors. Fix it and explain your approach."

They ask static questions. We generate infinite real-world scenarios. Candidates can't memorize answers when every assessment is dynamically generated. No more gaming the system by grinding through 500+ LeetCode problems.

They waste everyone's time. We respect it. 20-minute assessments with 90%+ completion rates. Your candidates don't need to block out their entire evening. Your team doesn't need to screen 200 people to find 5 good ones.

They cover common skills. We go deep and wide. 200+ skills including the rare ones you actually need: GenAI implementation, cybersecurity protocols, embedded systems. Not just "can you reverse a linked list."

They rely on human bias. We let code speak. No resume filtering. Just pure performance on tasks that matter.

They restrict. We give full freedom. We encourage candidates to use AI tools in our assessments. Wasn’t that the point of AI? Then why not allow candidates to use it during interviews?

Ready to hire your next candidate with proof of skill?

Utkrusht helps you significantly reduce hiring time by evaluating candidates' core skills.